When it comes to AI, Everyone is Talking Their Book

One of the best ways to interrogate a claim about the future of AI is to first ask how the person making the claim might benefit

I started my career in investment banking, where I sat on a trading floor. Among other things, Wall Street trading floors are fast-paced dens of acronyms, insider lingo, and oft-repeated phrases. When you work on a trading floor during the formative years of your career, you find yourself absorbing many of the sayings.

One of the many sayings that stuck with me was, “That guy is talking his book.”

As described by The Free Dictionary, talking one’s book means:

“1. The act of promoting a stock one owns in order to entice others to buy it. This would in turn benefit one's own investment portfolio. Talking one's book is not a well-regarded strategy. 2. More generally, the act of promoting one's company, product or other good or service.”

On the trading floor, that might mean that when someone says that the Federal Reserve will raise interest rates, that the dollar will depreciate against the Euro, or that they think the price of NVIDIA stock will be up over the next three months, they probably have a financial incentive for that thing to happen.

Talking your book isn’t necessarily bad. It’s quite rational, and while the phrase implies that the talker isn’t objective, it’s unclear in which direction the causation goes. Am I bullish on the price of bitcoin because I own some, or do I own some bitcoin because I’m bullish on its price? The answer is probably both.

Besides being rational, talking one's book is pervasive, not only on Wall Street but, as I’ve learned throughout my career, everywhere else too.

From political punditry to cultural critiques, people are typically in a position to benefit, financially or otherwise, from the future they are predicting.

EVERYONE is talking their book.

These days, this book-talking phenomenon is especially instructive when thinking about the massive hype surrounding generative artificial intelligence.

From doomsday prophecies that AI will kill us all to predictions that AI will usher in the singularity, the people and groups making these pronouncements are usually “talking their books.”

Here are a few examples:

In March, a group of more than 1000 technologists, executives, entrepreneurs, and academics that included Elon Musk and Apple co-founder Steve Wozniak signed an open letter calling to “immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.”

The letter, despite being short on details, has been controversial.

But I believe that much, though not all, of the “pause” rhetoric from the most prominent voices is motivated by competitive pressures instead of moral clarity. Many of the profit-motivated signatories of the letter see a pause as cover for them to catch up.

Elon Musk, who reportedly put $50 million into OpenAI, the maker of ChatGPT, when it was a nonprofit, and who claims that "I am the reason OpenAI exists," reeks of bitterness that he no longer has a governance or financial interest in the company, now valued at close to $30 billion.

Next, when OpenAI CEO Sam Altman recently gave congressional testimony on AI, he did so by preemptively calling for Congress to regulate makers of large language models:

Sam Altman: Number one, I would form a new agency that licenses any effort above a certain scale of capabilities and can take that license away and ensure compliance with safety standards. Number two, I would create a set of safety standards focused on what you said in your third hypothesis as the dangerous capability evaluations. One example that we’ve used in the past is looking to see if a model can self-replicate and self-exfiltrate into the wild. We can give your office a long other list of the things that we think are important there, but specific tests that a model has to pass before it can be deployed into the world. And then third I would require independent audits. So not just from the company or the agency, but experts who can say the model is or isn’t in compliance with these stated safety thresholds and these percentages of performance on question X or Y.

Having the CEO of a private company so proactively and enthusiastically propose regulation for his own industry feels like a path toward regulatory capture.

Whatever you think about licensing requirements, they would almost certainly help OpenAI by acting as a barrier to entry for new and smaller entrants.

Largely influenced by my front-row seat to the financial crisis, I’ve long felt that regulators, to be effective, need to be antagonistic toward the industries that they regulate. It’s early, but Sam Altman’s reception from Congress feels like anything but antagonism.

Many experts are fearful of this, according to The Verge:

Sarah Myers West, managing director of the AI Now Institute, tells The Verge she was suspicious of the licensing system proposed by many speakers. “I think the harm will be that we end up with some sort of superficial checkbox exercise, where companies say ‘yep, we’re licensed, we know what the harms are and can proceed with business as usual,’ but don’t face any real liability when these systems go wrong,” she said.

Other critics — particularly those running their own AI companies — stressed the potential threat to competition. “Regulation invariably favours incumbents and can stifle innovation,” Emad Mostaque, founder and CEO of Stability AI, told The Verge. Clem Delangue, CEO of AI startup Hugging Face, tweeted a similar reaction: “Requiring a license to train models would be like requiring a license to write code. IMO, it would further concentrate power in the hands of a few & drastically slow down progress, fairness & transparency.”

But what about the AI-“doomsday” crowd, those who think that AI poses an existential threat to humanity? Could they possibly benefit from predicting a literal worst-case scenario? Some think so.

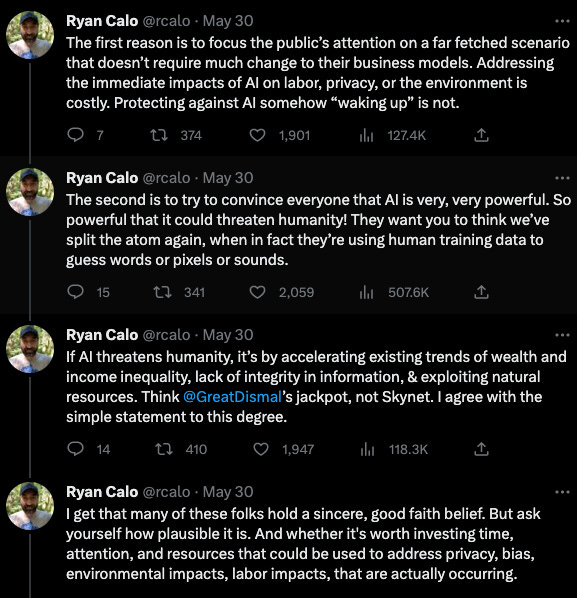

Ryan Calo, a professor at the University of Washington School of Law, recently posted a Twitter thread explaining how even the AI doomsayers might be talking their own books.

When it comes to AI, no one knows what’s going to happen. Even the most confident predictions about the future of AI are still merely educated guesses.

But those predictions don’t exist in a vacuum. In a sea of endless prognostications about the future of artificial intelligence, one of the best ways to interrogate a claim is to first ask how the person or institution making it might stand to benefit from being believed.
